I am the leader of the self-organizing-systems (SOS) group (whose other faculty members are Pinar Ozturk, Pauline Haddow and Jorn Hokland). Our main interest is the emergence of sophisticated, adaptive behavior in artificial intelligence systems, many of which have a strong modelling basis in contemporary neuroscience. In general, SOS members are interested in the modeling and simulation of a wide spectrum of emergent phenomena, and we support and advise student projects and master’s theses in many areas.

Two key tools of the trade in these areas are evolutionary algorithms (EAs) and artificial neural networks (ANNs), and we tend to support projects that involve either or both of them. However, our central research endeavor is understanding self-organization in natural and artificial neural networks and how this governs overt intelligent behavior.

At least three decentralized adaptive processes interact to sculpt the functioning brains of living organisms: evolution, development and learning. Understanding the links between structure and function in the brains of most animals, from insects to humans, requires a detailed consideration of all three processes. We call systems that combine all three tri-adaptive.

My research involves the construction of these tri-adaptive systems in order to a) better understand the mechanisms of animal/human intelligence, and b) design effective artificial neural networks (ANNs) for both sensorimotor tasks such as navigation and more cognitive tasks such as general problem solving.

Our central philosophy is that ANNs are the optimal representational medium for truly adaptive, human-like behavior, but their topological layouts and the properties of individual neurons and synapses (of which there are often tens or hundreds of thousands) cannot be pre-determined by human engineers in anything more than an ad-hoc manner. Even back-propagation, a key learning algorithm for ANNs, is restricted to completely supervised learning scenarios, which are extremely rare in the lives of both humans and autonomous robots.

In short, the search space for complete ANN designs that can achieve sophisticated behavior is dauntingly large. Nature has found several impressive points in this space via the evolution of genetic material (DNA) that encodes both a neurogenesis process and learning, the latter via various forms of experience-based synaptic modification. Our biologically-inspired models follow a similar approach: evolutionary algorithms manipulate genomes that serve as recipes for both a) simulated neurogenesis to grow ANN topologies, and b) the learning mechanisms of individual synapses.
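The genome-as-recipe idea can be illustrated with a deliberately tiny sketch. This is not code from any of our systems: the single-neuron "network", the toy imitation task, the error-driven weight update standing in for synaptic plasticity, and every name below are assumptions chosen only to show how evolution, development and learning nest inside one another.

```python
import random

N_INPUTS = 4
TARGET_WEIGHTS = [1.0, -1.0, 0.0, 0.5]  # hypothetical task: imitate this linear unit

def random_genome(rng):
    """A genome is a recipe: which synapses to grow, how strong, how plastic."""
    return {
        "mask": [rng.random() < 0.5 for _ in range(N_INPUTS)],  # topology
        "init": [rng.uniform(-1, 1) for _ in range(N_INPUTS)],  # initial strengths
        "rate": rng.uniform(0.0, 0.2),                          # synaptic learning rate
    }

def develop(genome):
    """Development: grow the network; None marks a synapse that never formed."""
    return [w if grown else None for w, grown in zip(genome["init"], genome["mask"])]

def output(weights, x):
    return sum(w * xi for w, xi in zip(weights, x) if w is not None)

def learn(weights, rate, rng, steps=50):
    """Learning: a simulated lifetime that adjusts only the synapses that exist."""
    for _ in range(steps):
        x = [rng.uniform(-1, 1) for _ in range(N_INPUTS)]
        error = output(TARGET_WEIGHTS, x) - output(weights, x)
        weights = [None if w is None else w + rate * error * xi
                   for w, xi in zip(weights, x)]
    return weights

def fitness(genome, rng):
    """Fitness is measured after development and learning, not on the raw genome."""
    weights = learn(develop(genome), genome["rate"], rng)
    tests = [[rng.uniform(-1, 1) for _ in range(N_INPUTS)] for _ in range(20)]
    mse = sum((output(TARGET_WEIGHTS, x) - output(weights, x)) ** 2
              for x in tests) / len(tests)
    return -mse

def mutate(genome, rng):
    child = {"mask": list(genome["mask"]), "init": list(genome["init"]),
             "rate": genome["rate"]}
    i = rng.randrange(N_INPUTS)
    if rng.random() < 0.3:
        child["mask"][i] = not child["mask"][i]   # add or remove a synapse
    child["init"][i] += rng.gauss(0, 0.2)
    child["rate"] = min(0.3, max(0.0, child["rate"] + rng.gauss(0, 0.02)))
    return child

def evolve(generations=30, pop_size=20, seed=0):
    """Evolution: truncation selection plus mutation over genomes."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scored = sorted(population, key=lambda g: fitness(g, rng), reverse=True)
        history.append(fitness(scored[0], rng))
        parents = scored[: pop_size // 2]
        population = parents + [mutate(rng.choice(parents), rng)
                                for _ in range(pop_size - len(parents))]
    return population[0], history

if __name__ == "__main__":
    best, history = evolve()
    print(best["mask"], round(best["rate"], 3), history[-1])
```

Note that selection never sees the weights directly. It sees only the behavior that remains after the genome has been developed into a network and that network has lived through its learning phase, which is the essential property of a tri-adaptive system.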

Unfortunately, the computational demands of tri-adaptive systems are enormous. Alone, each process can take hours or days of computer time. But in combination, the demands are multiplicative, not additive, since each evolutionary-algorithm individual must progress through a developmental and learning stage, the latter of which involves a simulated "lifetime" or at least "adolescence".
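A back-of-the-envelope calculation makes the multiplicative growth concrete. The function name and all of the figures below are hypothetical and chosen only for illustration; they are not measurements from any of our systems.

```python
def total_sim_steps(generations, pop_size, dev_steps, lifetime_steps):
    """Simulation steps when every individual is developed and then lives a lifetime."""
    per_individual = dev_steps + lifetime_steps
    return generations * pop_size * per_individual

# Evolution alone: each individual costs a single evaluation step.
evolution_only = total_sim_steps(100, 50, 0, 1)
# Tri-adaptive: each individual is grown (200 steps) and then learns (10,000 steps).
tri_adaptive = total_sim_steps(100, 50, 200, 10_000)

print(evolution_only)  # 5000
print(tri_adaptive)    # 51000000
```

Under these assumed figures, adding development and learning to every individual raises the cost of the same evolutionary run by a factor of 10,200.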

My TRIDAP (2004) and BALKO (2010) systems consider tri-adaptive systems vis-a-vis the Baldwin Effect, wherein learning affects the course and speed of evolution. However, the learning system in TRIDAP is trivial in comparison to an ANN. One overarching goal of my research is to scale up tri-adaptive approaches to neural networks that are complex enough to exhibit intelligent behavior in real and simulated robots.
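For readers unfamiliar with the Baldwin Effect, the following sketch is loosely modelled on the well-known experiment of Hinton and Nowlan (1987), scaled down to run quickly. It is not the TRIDAP or BALKO implementation; the gene count, trial count, fitness formula and function names are all assumptions made for illustration.

```python
import random

GENES = 10     # assumed size, kept small so the sketch runs quickly
TRIALS = 200   # learning trials in one simulated lifetime

def random_genome(rng):
    # '1' and '0' are fixed alleles; '?' is a plastic allele settled by learning.
    return [rng.choice("10??") for _ in range(GENES)]

def lifetime_fitness(genome, rng, learning=True):
    """Only the all-'1' setting is rewarded; reaching it sooner pays more."""
    if "0" in genome:
        return 1.0                       # a fixed wrong allele cannot be learned away
    unknown = genome.count("?")
    if unknown == 0:
        return 1.0 + (GENES - 1)         # innately correct: maximum fitness
    if not learning:
        return 1.0                       # plastic alleles are useless without learning
    for trial in range(TRIALS):
        # Learning as random guessing: each plastic gene comes up '1' half the time.
        if all(rng.random() < 0.5 for _ in range(unknown)):
            return 1.0 + (GENES - 1) * (TRIALS - trial - 1) / TRIALS
    return 1.0

def evolve(learning, generations=20, pop_size=200, seed=0):
    """Return the final fraction of alleles that are innately correct ('1')."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    for _ in range(generations):
        weights = [lifetime_fitness(g, rng, learning) for g in population]
        children = []
        for _ in range(pop_size):
            # Fitness-proportionate selection followed by one-point crossover.
            mum, dad = rng.choices(population, weights=weights, k=2)
            cut = rng.randrange(1, GENES)
            children.append(mum[:cut] + dad[cut:])
        population = children
    return sum(g.count("1") for g in population) / (pop_size * GENES)

if __name__ == "__main__":
    print("with learning:   ", evolve(learning=True))
    print("without learning:", evolve(learning=False))
```

Learning never rewrites the genome in this sketch. Any difference between the two runs arises only because individuals that can learn the rewarded setting are selected more often, which is the mechanism the Baldwin Effect describes.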

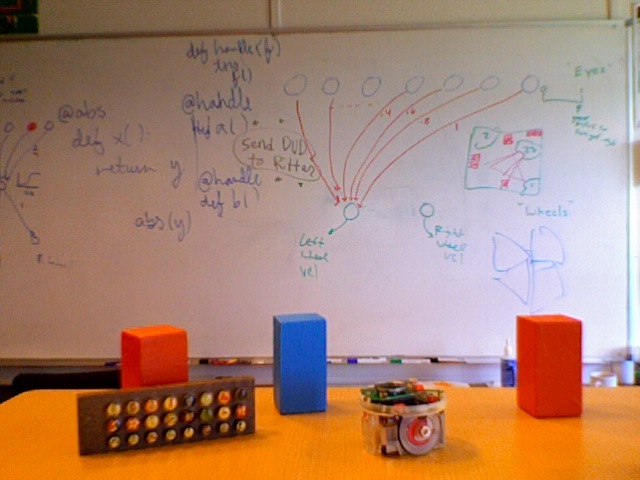

In 2009, two summer students (Kjeld Karim Berg Massali and Dag Henrik Fjaer) and I worked extensively with e-puck robots (pictured) in order to create a Python-based system for easily interacting with either a real or simulated e-puck. In 2010, another summer student (Dag Oyvind Tornes) extended that work with more elaborate computer vision such that the robots can now detect one another's visual signals. This sets the stage for work in swarm intelligence, which we hope to begin in autumn 2010. In general, with our toolbox of Python modules growing, we hope that our AI researchers and students can soon begin to test their AI control strategies on e-pucks.

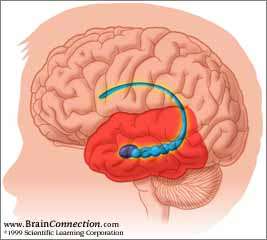

We have recently begun cooperating with NTNU's world-renowned Center for the Biology of Memory (CBM). Their discovery of grid cells in the entorhinal cortex, which provides the main cortical input to the hippocampus, has spawned a widespread empirical and theoretical investigation into the possible function(s) of this intriguing type of cell/neuron. Since the hippocampus appears to be important for both a) spatial reasoning and navigation, and b) long-term, episodic memory formation, it could be a key link between sensorimotor and higher-level cognitive behaviors, and grid cells may play an important role in both.

This link between sensorimotor behavior and cognition is an underlying assumption of Situated and Embodied AI (SEAI), and as we gain a better understanding of how the brain integrates the two, we hope to incorporate those principles into neural-network-based controllers for robots. The PUCKER system allows us to test theories of the relationships between a) properties of individual neurons (such as grid cells), b) mechanisms of synaptic modification (which underlie much of the learning in the brain), c) neural network topologies (such as those found in the hippocampus), and d) sensorimotor (and more cognitive) behavior (as observed in the real or simulated e-pucks). We will continue to develop PUCKER as both a research and teaching tool in the upcoming months.

Earlier (pre 2002) Research